New to Rust? Grab our free Rust for Beginners eBook Get it free →

Pandas- Data Frames and Uploading Files

Pandas (PANel Data Analysis) is a popular library when it comes to data analysis and machine learning. Pandas library is built on top of Numpy. It is very complex to handle data in Numpy before the evolution of the pandas’ Data frame. Numpy is a matrix array that does indexing like 0, 1, 2, 3…… This makes it difficult to call columns based on the index.

For example, you want to fetch data of the country column (column no 5) in a given data file. So, you need to remember column number (index 4) always in case you are using Numpy.

But, with the emergence of the pandas’ Data frame, indexing is done based on the column name (or custom index). So, it becomes easy to fetch data based on column names like “country”. The analyst does not need to remember the index like 0, 1, 2. This is the benefit of the pandas’ data frame. Pandas’ data frame also offers the below benefits:

- Solves the usability of the Numpy problem.

- It can read, write data in CSV, text, excel, and JSON formats.

- It provides fast and efficient data manipulation.

- It can handle incomplete or missing data.

Pandas offer two types of data sets. First is “Series” is a one-dimensional array and this also solves the index issue of Numpy. The second is “Data Frame” which is a two-dimensional tabular format data structure.

Let’s see how a custom index can be defined in the series data set. There are 2 lists of countries and capitals. Series can be created with these two lists. The first declaration sets the default index as Numpy. The second declaration shows how to define a custom index as “countries”. So now capitals data will have a custom index as countries can be accessed as well.

import numpy as np

import pandas as pd

countries=['India','USA','Qatar']

capitals=['New Delhi','Washington DC','Doha']

pd.Series(data=capitals)

custom_series=pd.Series(data=capitals,index=countries)

custom_series['Qatar']

This is the beauty of pandas data structure as you can accessing data based on a custom index like custom_series[‘Qatar’] instead of accessing data with a default index like [0], [2]. This is why the pandas library has become a favorite of data engineers, scientists, and machine learning guys.

Now, the limitation of Series is that it is one dimensional. But, in the real world, we want data in a two-dimensional format. So, the answer is Data Frames. Let’s move on to Data Frames. Data Frames stores any data types like int, string, float, boolean in tabular format. It offers a lot of mathematical functions and flexibility. Let’s see how data frames look like:

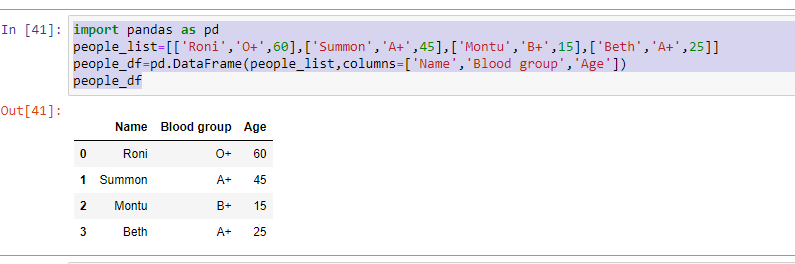

import pandas as pd

people_list=[['Roni','O+',60],['Summon','A+',45],['Montu','B+',15],['Beth','A+',25]]

people_df=pd.DataFrame(people_list,columns=['Name','Blood group','Age'])

people_df

Output:

Here, you can see the column name appears on top of each column as Name, Blood group, Age instead of 0,1,2.

As an analyst, you want to fetch only the Name from this data set as of now. So, you are not required to remember the position of the column whether Name is stored on the second or third column. You only have to provide the name of the custom index. Nobody wants to remember the numbers. Right? Remembering a custom name is always easy for everyone. This is the benefit and flexibility that Data Frames provide.

people_df['Name']

Output:

0 Roni

1 Summon

2 Montu

3 Beth

So, this is all basics of data structures of pandas. A question may arise in your mind that we deal with huge data in the real world. Right? The data will not be always as small as we have seen now in form of lists. There must be a big text file, CSV files, or excel files where data resides. That data should be uploaded in Data Frames for further study. How to upload that big data file?

There are different file formats such as CSV, text file, excel file, JSON file. We will see now how to upload data from different file formats.

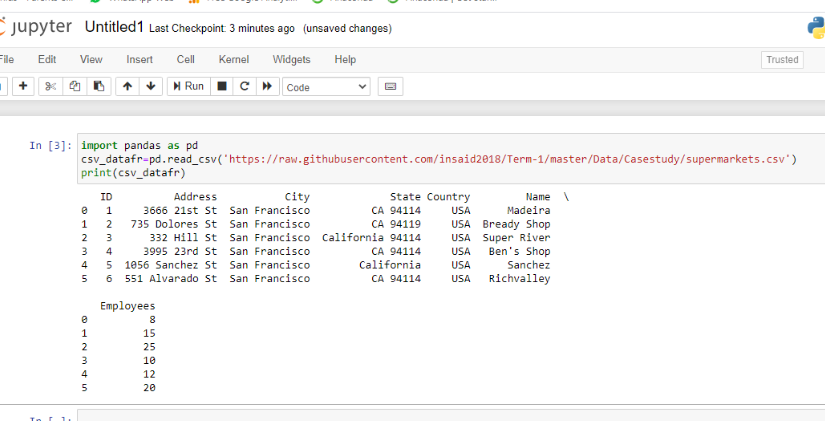

1. Uploading a CSV file:

: The CSV files are comma-separated files. Suppose, we have a sample CSV file as below and want to upload it. This sample file is stored on the given GitHub URL. You can choose any URL or your GitHub URL where the file is kept. Read_csv is the method to upload CSV file.

import pandas as pd

csv_datafr=pd.read_csv('URL')

print(csv_datafr)

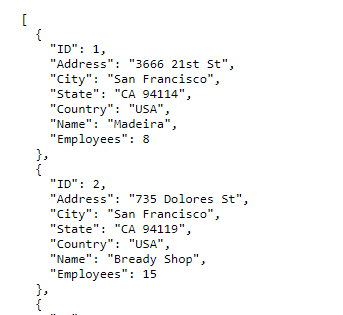

2. Uploading a JSON file:

JSON file format is popular as this is not dependent on the device. The JSON file format data can be handled on any device like android phones, iOS. Read_json is the function to upload JSON files. The sample JSON file is as below:

Below is the syntax to upload JSON file.

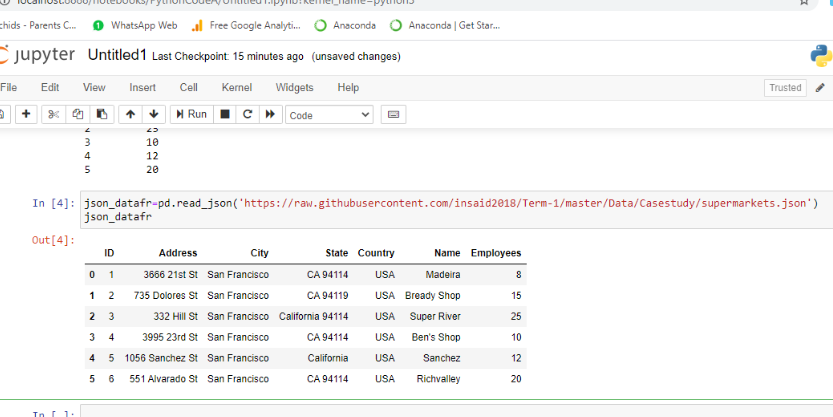

import pandas as pd

json_datafr=pd.read_json('URL')

json_datafr

Output:

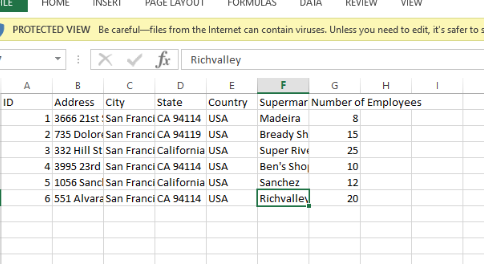

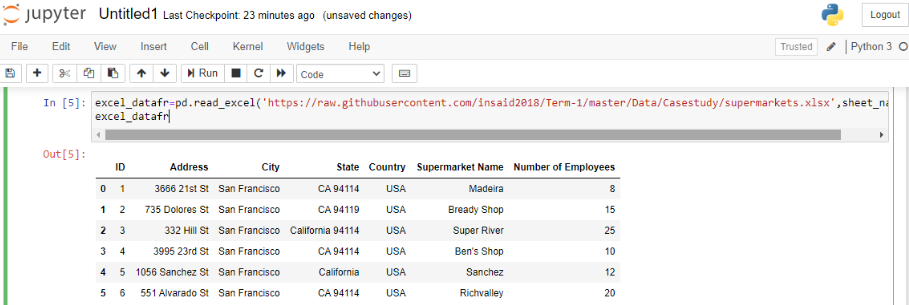

3. Uploading an Excel file:

Excel is also a source of huge data. The pandas library provides a read_excel method to upload an excel file. There is a parameter “sheet_name” which holds the sheet number which should be uploaded. For example, you want to upload the data of the first sheet of an excel then sheet_name will hold value 0. For the second sheet data upload, sheet_name will hold value 1.

you can use the below code:

excel_datafr=pd.read_excel('URL',sheet_name=0)

excel_datafr

4. Uploading a text file:

The method to upload a text file is the same as a CSV file but you need to provide sep parameter. The sep parameter stands for the separator of the text file. The column data is separated using either semi-colon (;) or comma (,) in a single row. The sep parameter will explain to pandas which separator is used in a text file for different columns.

text_datafr=pd.read_csv('URL',sep=';')

text_datafr

The data is generally uploaded using a URL. However, you can upload data from the local machine as well. The data file should be placed inside the folder where the jupyter notebook is installed if you are using a jupyter notebook. If you are using some other IDE then your data files should be placed inside the folder where IDE is installed.

You can provide the path of your data file as well and provide the path instead of URL in the given method. This can be done using sample code as below :

path="C:\Users\Swapnil\localtext4.txt” #give your own local path

csvlocal_datafr=pd.read_csv(path, sep=',')

csvlocal_datafr

Pandas library also provides related methods to explore more about uploaded data. Suppose you want to just have a look at uploaded data whether it is uploaded correctly or not. In this case, there is no need to print the entire data frame, you can use the head method to get the first five rows.

Dataframe_variable.head()

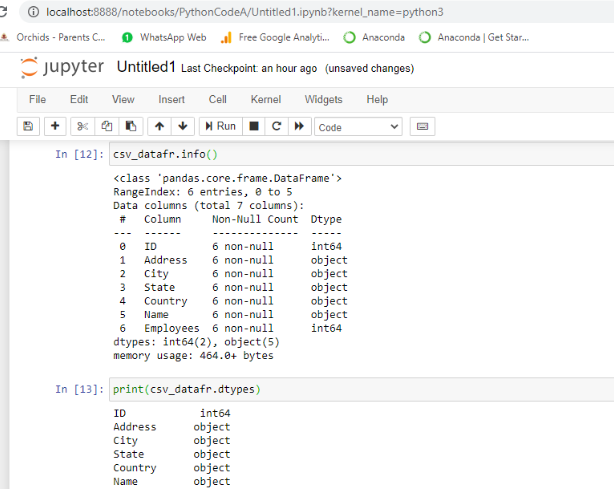

Similarly, the info method will provide the information regarding data type, values of non-null, or null value in a given column so that you can trace the count of missing values.

Dataframe_variable.info()

The result set as the above output screen will be displayed. There are many more functions and attributes like Shape and count to know the dimension and count of records.

You have seen how to upload files in pandas’ data frame. It is recommended to explore more using references to other articles and documentation. I hope you enjoyed uploading data into Data frames and setting custom dimensions.

Keep exploring more!