In this article, we are going to learn the basics of Apache Kafka and its core concepts. And yes, a bit of history too.

What is Kafka

Apache Kafka is an open-source distributed streaming platform originally developed at LinkedIn and now maintained by the Apache Software Foundation.

Kafka aims to provide a high-throughput, low-latency, scalable, unified platform for handling real-time data streams.

Apache Kafka is built around the commit log principle, i.e. messages are appended to a durable, ordered log and are kept for a configurable retention period instead of being deleted as soon as they are read. Kafka uses a publish/subscribe (pub/sub) mechanism to produce and consume messages.
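To make the commit log idea more concrete, here is a minimal in-memory sketch in Python. It is not how Kafka stores data on disk; the `CommitLog` class, its `append` and `read` methods, and the sample messages are all hypothetical names chosen for illustration. It only shows that messages are appended at increasing offsets and that reading does not remove anything, so several subscribers can read the same log from different positions.

```python
from dataclasses import dataclass, field


@dataclass
class CommitLog:
    """A toy append-only log (illustrative only, not Kafka's storage format)."""
    records: list = field(default_factory=list)

    def append(self, message: str) -> int:
        """Append a message and return its offset (its position in the log)."""
        self.records.append(message)
        return len(self.records) - 1

    def read(self, offset: int, max_records: int = 10) -> list:
        """Return up to max_records messages starting at offset; nothing is deleted."""
        return self.records[offset:offset + max_records]


log = CommitLog()
for msg in ["signup", "login", "purchase"]:
    log.append(msg)

# Two independent subscribers track their own position in the same log.
analytics_offset = 0  # starts from the beginning
billing_offset = 2    # only interested in the latest message

analytics_batch = log.read(analytics_offset)
billing_batch = log.read(billing_offset)
print(analytics_batch)
print(billing_batch)
```

Because each reader only remembers an offset, adding another subscriber does not affect the existing ones.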

A bit of History

Kafka is named after the author Franz Kafka, because Jay Kreps, one of Kafka's original developers, likes his work.

The original developers of the Kafka project later founded a company called Confluent.

You can learn more about Franz Kafka here.

Architecture

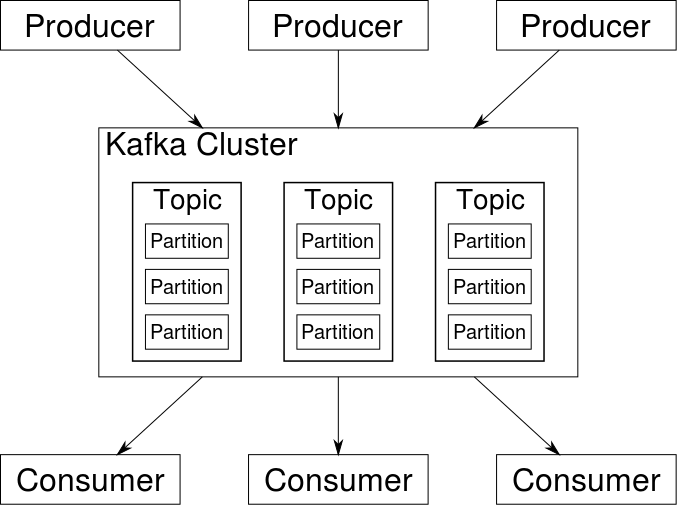

The diagram above shows a high-level view of the Kafka architecture.

Kafka runs as a cluster of one or more servers, which are called brokers in Kafka terminology. Producers write messages to the brokers and consumers read them; Kafka stores these messages in logs. We will learn more about Kafka topics and partitions in the upcoming section.

There are four major APIs in Kafka:

- Producer API – Allows applications to publish streams of messages to Kafka topics.

- Consumer API – Allows applications to subscribe to topics and consume the streams of messages in them.

- Connect API (Connector API) – Allows importing data from and exporting data to other systems.

- Streams API – Allows transforming input streams into output streams.

We are going to use the Producer and Consumer APIs a lot in upcoming tutorials.

Kafka Topics and Partitions

Kafka stores messages in topics. A producer appends new messages to a topic, and a consumer reads messages from the topic.

Topics can be divided into partitions to scale and distribute data across multiple brokers, and each partition can be replicated (copied) to other brokers to handle failover.
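The following Python sketch illustrates partitioning and replication under simplified assumptions. The broker names, the partition count, the replication factor, and the helper functions `partition_for` and `replicas_for` are all hypothetical. The CRC32 hash and the round-robin replica placement are stand-ins chosen for simplicity, not the algorithms Kafka itself uses.

```python
import zlib

NUM_PARTITIONS = 3                              # assumed partition count for one topic
BROKERS = ["broker-0", "broker-1", "broker-2"]  # hypothetical broker names
REPLICATION_FACTOR = 2                          # each partition is stored on two brokers


def partition_for(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a message key to a partition; the same key always maps to the same partition."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def replicas_for(partition: int, brokers=BROKERS, replication_factor=REPLICATION_FACTOR) -> list:
    """Choose replication_factor distinct brokers for a partition, round-robin."""
    return [brokers[(partition + i) % len(brokers)] for i in range(replication_factor)]


# Distribute a few keyed messages over the partitions.
partitions = {p: [] for p in range(NUM_PARTITIONS)}
for key, value in [("user-1", "login"), ("user-2", "login"), ("user-1", "logout")]:
    partitions[partition_for(key)].append((key, value))

for p in range(NUM_PARTITIONS):
    print(p, replicas_for(p), partitions[p])
```

In this sketch, messages with the same key stay in one partition and keep their order there, while different partitions can live on different brokers. If one broker holding a partition fails, another broker in the replica list still has a copy.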

Kafka achieves its high throughput largely through partitioning, which lets producers and consumers work in parallel, while replication provides fault tolerance.

Why ZooKeeper

Kafka uses ZooKeeper to provide the brokers with metadata about the processes running in the cluster, and to handle health checking and broker leadership election. ZooKeeper is the go-to software when it comes to handling the coordination challenges of distributed applications.

Where can we use Kafka

Kafka fits use cases that require real-time data exchange. Examples include matching passengers and drivers at Uber, real-time analytics, e-commerce, and tracking page views.

One popular use of Kafka is in microservices architectures, where multiple small services deployed on different servers communicate with each other through Kafka.

How to integrate Kafka in your code

Kafka client libraries are available for many programming languages. A few examples are listed below:

Python: https://github.com/confluentinc/confluent-kafka-python

Node: https://www.npmjs.com/package/no-kafka

PHP: https://github.com/EVODelavega/phpkafka

Ruby: https://github.com/zendesk/ruby-kafka

In Java, you can use the official Kafka client directly through the org.apache.kafka packages.

Conclusion

We learned what Kafka is and why we need a stream processing platform. Kafka is really useful when developing distributed applications.

This article is part of a series. Check out the other articles here:

1: What is Kafka

2: Setting Up Zookeeper Cluster for Kafka in AWS EC2

3: Setting up Multi-Broker Kafka in AWS EC2

4: Setting up Authentication in Multi-broker Kafka cluster in AWS EC2

5: Setting up Kafka management for Kafka cluster

6: Capacity Estimation for Kafka Cluster in production

7: Performance testing Kafka cluster