
What Are Tokens in ChatGPT – A Simple Explanation

If you have ever used ChatGPT or heard about it, you might have come across the term “tokens”. Tokens are a fundamental part of how ChatGPT works, but what exactly are they?

In this article, we will break down everything you need to know about tokens in ChatGPT, from what they are to how they work, and why they matter. We will use simple language and examples to make it easy to understand.

Breaking Down Tokens – How ChatGPT Processes Text

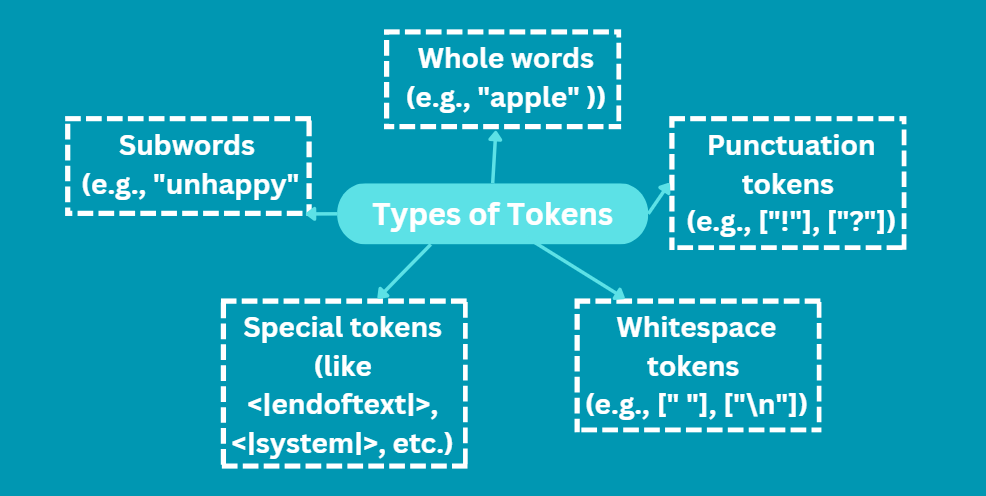

Tokens are the basic pieces of text that ChatGPT uses to read and generate language. They are small, meaningful chunks of a larger piece of text.

When you type something into ChatGPT, it breaks your message into tokens, and the same happens when ChatGPT answers you. A token can be as short as one character (such as “a” or “!”) or as long as a whole word (such as “chat” or “understanding”).

For example: The word “cat” is one token. A punctuation mark like “!” is also one token.

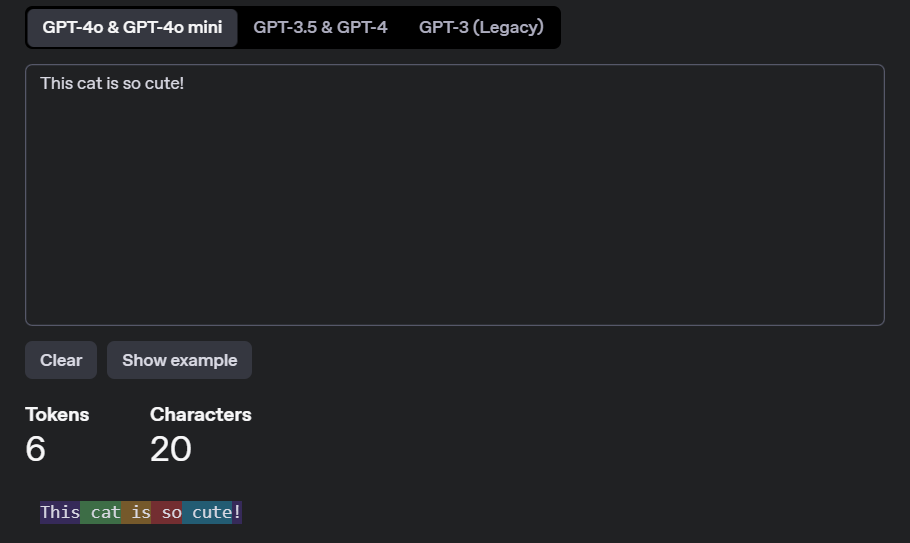

Let’s look at some more examples to understand tokens better. Exact counts depend on the tokenizer each model version uses, so treat these numbers as approximate:

- Single Words:
  - “Hello” = 1 token
  - “ChatGPT” = 1 token
- Punctuation:
  - “,” = 1 token
  - “!” = 1 token
- Common Phrases:
  - “How are you?” = 4 tokens
  - “I’m fine, thank you!” = 6 tokens
- Longer Sentences:
  - “Artificial intelligence is amazing ⭐.” = 6 tokens (counting the emoji as 1 token, although emoji often take more than one)

You can check how any text is split by pasting it into OpenAI’s Tokenizer tool.

Byte Pair Encoding (BPE) – The Secret Behind Tokenization

To break text into tokens, ChatGPT uses a method called Byte Pair Encoding (BPE). BPE splits text into smaller pieces based on which character sequences appear together most often. The merges are learned once, from a large body of training text, and then applied to whatever you type. Here’s how it works:

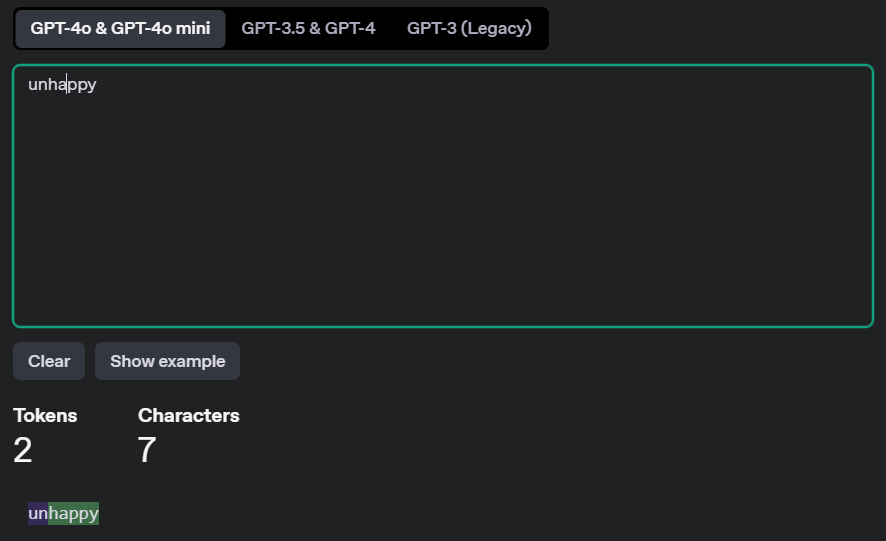

- It starts with characters: BPE first splits the text into individual characters. For example, the word “unhappy” would initially be split into [“u”, “n”, “h”, “a”, “p”, “p”, “y”]. (The BPE used by GPT models actually starts from bytes rather than characters, but the idea is the same.)

- Then it finds common pairs: The algorithm looks for the most frequent pair of adjacent symbols and merges it into a single new token. Here, “un” is a common pair, so “u” and “n” are merged into one token.

- It repeats: The process keeps merging the most frequent pairs, building larger subwords. Because “happy” is a common word, its characters are eventually merged into a single token as well.

- The result: In this simplified example, “unhappy” ends up as two tokens, “un” and “happy”.
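To make these steps concrete, here is a toy sketch of BPE training in Python. It assumes a tiny made-up word list with invented frequencies, works on characters instead of bytes, and breaks ties between equally frequent pairs alphabetically. The function names (`apply_merge`, `learn_bpe`, `tokenize`) are illustrative only and are not part of any OpenAI library. Real tokenizers learn many thousands of merges from huge amounts of text, but the core loop is the same idea.

```python
from collections import Counter


def apply_merge(symbols, pair):
    """Replace each adjacent occurrence of `pair` with one merged symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return out


def learn_bpe(word_freqs, num_merges):
    """Learn an ordered list of merges from a {word: frequency} dictionary."""
    # Step 1: every word starts out as a list of single characters.
    corpus = {word: list(word) for word in word_freqs}
    merges = []
    for _ in range(num_merges):
        # Step 2: count adjacent pairs, weighted by how often each word occurs.
        counts = Counter()
        for word, symbols in corpus.items():
            for pair in zip(symbols, symbols[1:]):
                counts[pair] += word_freqs[word]
        if not counts:
            break  # every word is already a single token
        # Step 3: merge the most frequent pair (ties broken alphabetically).
        best = min(counts, key=lambda p: (-counts[p], p))
        merges.append(best)
        corpus = {w: apply_merge(s, best) for w, s in corpus.items()}
    return merges


def tokenize(word, merges):
    """Split a new word into tokens by replaying the learned merges in order."""
    symbols = list(word)
    for pair in merges:
        symbols = apply_merge(symbols, pair)
    return symbols


# Hypothetical training data: words and how often they appear.
word_freqs = {"happy": 10, "unhappy": 5, "unkind": 4, "undo": 3}
merges = learn_bpe(word_freqs, num_merges=5)
print(merges)
print(tokenize("unhappy", merges))  # ['un', 'happy']
```

With five merges, “happy” becomes one token and “un” becomes another, so “unhappy” splits in two. On this toy data a sixth merge would join “un” and “happy” into a single token, which shows how the final split depends on how many merges were learned.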

How ChatGPT Uses Tokens to Process and Generate Text

ChatGPT is built on GPT (Generative Pre-trained Transformer), a model that processes and generates text as tokens. Here’s how it works step by step:

- ChatGPT Tokenizes Input Text: When you type something, ChatGPT splits it into tokens. For example, the sentence “Hello, how are you?” might be split into tokens like this: [“Hello”, “,”, “how”, “are”, “you”, “?”]. (In practice, the space before a word is usually part of that word’s token, as in “ how”.)

- The Tokens Are Processed: Each token is converted into a number (a token ID) and fed into the model, which uses what it learned during training to work out the context and meaning of your input.

- Output Text Is Generated: The model predicts a likely next token, adds it to the response, and repeats this one token at a time. If you ask “What is the weather like?”, ChatGPT might produce tokens like [“The”, “weather”, “is”, “sunny”, “today”, “.”].

- The Response Is Shown: Finally, these tokens are converted back into text, and you see the response on your screen.
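The four steps above can be mimicked with a deliberately tiny Python sketch. Everything in it is hypothetical: the ten-entry vocabulary, the token IDs, and the `NEXT_TOKEN` lookup table, which stands in for the neural network. A real GPT model scores every token in its vocabulary using the whole context rather than only the last token, and its tokenizer uses BPE rather than the greedy longest-match rule used here.

```python
# Hypothetical toy vocabulary: a token's position in the list is its ID.
VOCAB = ["What", " is", " the", " weather", " like", "?",
         "The", " sunny", " today", "."]
TOKEN_TO_ID = {token: i for i, token in enumerate(VOCAB)}

# Stand-in for the model: maps the last token to the "most likely" next token.
NEXT_TOKEN = {
    TOKEN_TO_ID["?"]: TOKEN_TO_ID["The"],
    TOKEN_TO_ID["The"]: TOKEN_TO_ID[" weather"],
    TOKEN_TO_ID[" weather"]: TOKEN_TO_ID[" is"],
    TOKEN_TO_ID[" is"]: TOKEN_TO_ID[" sunny"],
    TOKEN_TO_ID[" sunny"]: TOKEN_TO_ID[" today"],
    TOKEN_TO_ID[" today"]: TOKEN_TO_ID["."],
}


def encode(text):
    """Step 1: split text into token IDs (greedy longest match, not real BPE)."""
    ids, pos = [], 0
    while pos < len(text):
        candidates = [t for t in VOCAB if text.startswith(t, pos)]
        if not candidates:
            raise ValueError(f"no token matches text at position {pos}")
        best = max(candidates, key=len)
        ids.append(TOKEN_TO_ID[best])
        pos += len(best)
    return ids


def generate(prompt_ids, max_new_tokens=10):
    """Steps 2-3: repeatedly predict the next token until a period appears."""
    context = list(prompt_ids)
    reply = []
    for _ in range(max_new_tokens):
        next_id = NEXT_TOKEN.get(context[-1])
        if next_id is None:
            break  # the toy table has no prediction for this token
        context.append(next_id)  # the new token becomes part of the context
        reply.append(next_id)
        if next_id == TOKEN_TO_ID["."]:
            break
    return reply


def decode(ids):
    """Step 4: turn token IDs back into text."""
    return "".join(VOCAB[i] for i in ids)


prompt_ids = encode("What is the weather like?")
print(prompt_ids)                    # [0, 1, 2, 3, 4, 5]
print(decode(generate(prompt_ids)))  # The weather is sunny today.
```

Each generated token is appended to the context before the next prediction; that feedback loop is what “one token at a time” means.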

Keep in mind that tokens aren’t always whole words. Sometimes they’re parts of words, especially for longer or less common terms.

How Many Tokens Can ChatGPT Handle?

Depending on the version of the model you’re using, ChatGPT can handle a certain number of tokens at once, known as its context window. For example, GPT-3.5 originally handled up to 4,096 tokens, and some versions of GPT-4 can take 8,192 tokens or more. This limit covers your input and the model’s response combined. If you go over it, you’ll have to shorten your input or break it into smaller pieces.
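Because the prompt and the reply share one budget, it helps to check the arithmetic before sending a request. This sketch assumes you already know your token counts (for example, from a tokenizer); the 4,096 default matches the GPT-3.5 figure above, and the function names are made up for illustration. Chat APIs may also add a few formatting tokens per message, so it is wise to leave some headroom.

```python
def fits_in_context(input_tokens, max_output_tokens, context_window=4096):
    """Return True if the prompt plus the space reserved for the reply fits."""
    return input_tokens + max_output_tokens <= context_window


def split_into_chunks(token_ids, chunk_size):
    """Break a long token sequence into consecutive chunks of at most chunk_size tokens."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [token_ids[i:i + chunk_size] for i in range(0, len(token_ids), chunk_size)]


print(fits_in_context(3500, 500))  # True: 3,500 + 500 = 4,000 <= 4,096
print(fits_in_context(3800, 500))  # False: 3,800 + 500 = 4,300 > 4,096

# Placeholder IDs standing in for a 10,000-token document.
document = list(range(10_000))
chunks = split_into_chunks(document, chunk_size=3000)
print([len(c) for c in chunks])  # [3000, 3000, 3000, 1000]
```

A chunk size of 3,000 leaves roughly 1,000 tokens of each 4,096-token window for the model’s reply.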

How Tokens Impact the Cost of Using ChatGPT

If you use ChatGPT’s models through OpenAI’s API, you pay for the number of tokens you use. Here’s how it works:

- Input Tokens: The tokens in your message.

- Output Tokens: The tokens in ChatGPT’s response.

For instance, if you send a message with 10 tokens and get a reply with 20 tokens, you are billed for 30 tokens in total. The price per token depends on the model you use, and input and output tokens are often priced differently.
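The billing arithmetic is simple enough to write down. In this sketch the per-million-token prices are hypothetical placeholders, not OpenAI’s actual rates (those vary by model and change over time), and the function name is illustrative. Input and output are priced separately because providers often charge different rates for each.

```python
def request_cost(input_tokens, output_tokens,
                 input_price_per_million, output_price_per_million):
    """Cost in dollars of one request, given per-million-token prices."""
    return (input_tokens * input_price_per_million
            + output_tokens * output_price_per_million) / 1_000_000


# Hypothetical prices: $1.00 per million input tokens, $2.00 per million output tokens.
cost = request_cost(10, 20, input_price_per_million=1.00, output_price_per_million=2.00)
print(f"${cost:.6f}")  # $0.000050 for the 10-in, 20-out example above
```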

You can use the following tips to manage tokens:

- Be Concise: When you are close to the token limit, make your inputs shorter and more to the point.

- Don’t Repeat Yourself: Repeating the same information wastes tokens.

- Use Abbreviations Carefully: Abbreviations and shorter words can save tokens, although uncommon abbreviations may be split into several tokens.

- Split Long Conversations: If a conversation gets too long, break it into several parts.
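For long conversations, one common approach (an alternative to splitting) is to keep only the most recent messages that fit within a token budget. This sketch estimates tokens with the rough four-characters-per-token rule of thumb for English instead of a real tokenizer, and the helper names and sample messages are hypothetical.

```python
import math


def estimate_tokens(text):
    """Rough estimate: about 4 characters per token for English text."""
    return math.ceil(len(text) / 4)


def trim_history(messages, budget):
    """Keep the newest messages whose estimated token total fits within budget."""
    kept, total = [], 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if total + cost > budget:
            break  # this message and everything older is dropped
        kept.append(message)
        total += cost
    return list(reversed(kept))  # restore chronological order


history = [
    "Tell me about tokens.",
    "Tokens are small pieces of text that the model reads and writes.",
    "How many can GPT-3.5 handle?",
]
print(trim_history(history, budget=25))
```

Dropping the oldest messages keeps the most recent context, at the cost of the model “forgetting” the start of the conversation.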

Why Tokens Matter in ChatGPT & AI Models

Tokens matter for several reasons:

- Understanding: Breaking text into tokens gives ChatGPT units it can work with to learn context, grammar, and meaning.

- Length Limits: Each model has a maximum number of tokens it can handle for input and output combined. For instance, GPT-3.5 originally handled up to 4,096 tokens. If your input is too long, you will have to shorten it.

- Cost: When you use OpenAI’s API, you are billed per token, so the more tokens a conversation uses, the more it costs.

Fun Fact: How Tokens Vary Across Different Languages

Did you know that tokens behave differently in different languages? For example:

- In English, a token is about 4 characters long on average, and common words are often a single token.

- In Spanish, a token is around 3 characters long on average, though this depends on the structure of the word.

- In Chinese, a token often corresponds to a single character (and some rare characters take more than one token), while some common words, like greetings, may be a single token.

The same sentence can therefore take a different number of tokens depending on the language it is written in.
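One reason for these differences is that GPT tokenizers use byte-level BPE: text is first turned into UTF-8 bytes, and merges are learned on top of those bytes. The short sketch below (the sample sentences are arbitrary) compares characters with UTF-8 bytes. An English letter takes one byte, accented Spanish letters take two, and most Chinese characters take three, so less common Chinese characters can end up as several tokens.

```python
samples = {
    "English": "How are you?",
    "Spanish": "¿Cómo estás?",
    "Chinese": "你好吗？",
}

for language, text in samples.items():
    n_chars = len(text)
    n_bytes = len(text.encode("utf-8"))
    print(f"{language}: {n_chars} characters, {n_bytes} UTF-8 bytes")

# English: 12 characters, 12 UTF-8 bytes
# Spanish: 12 characters, 15 UTF-8 bytes
# Chinese: 4 characters, 12 UTF-8 bytes
```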

Conclusion

ChatGPT understands and generates text using tokens. Breaking text into tokens lets ChatGPT process language efficiently, but it also limits how much you can include in a single request. Understanding how tokens work will help you use ChatGPT well, whether you want to stay within the token limit, keep costs down, or get better results.

So the next time you chat with ChatGPT, remember that every word, punctuation mark, and even part of a word becomes a token working behind the scenes to make the conversation happen.

